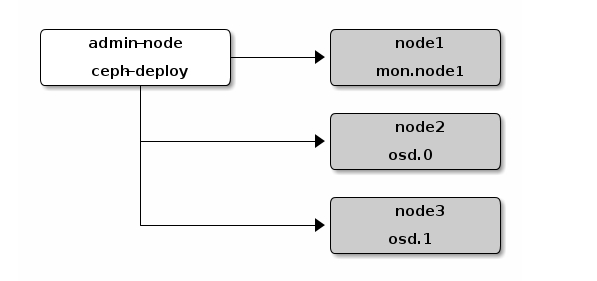

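Cluster architecture:

Environment:

10.200.51.4 (ceph1): admin, osd, mon; serves as the admin and monitor node

10.200.51.9 (ceph2): osd, mds

10.200.51.10 (ceph3): osd, mds

10.200.51.113 (ceph4): client node

ceph1 is the admin, OSD and monitor node. Add a new disk (/dev/sdb) to each of the first three nodes, format it as XFS and mount it as that node's OSD data directory: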

```
[root@ceph1 ~]# mkfs.xfs /dev/sdb
meta-data=/dev/sdb               isize=512    agcount=4, agsize=1310720 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=5242880, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph1 ~]# mkdir /var/local/osd{0,1,2}
[root@ceph1 ~]# mount /dev/sdb /var/local/osd0/
```

```
[root@ceph2 ~]# mkfs.xfs /dev/sdb
meta-data=/dev/sdb               isize=512    agcount=4, agsize=1310720 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=5242880, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph2 ~]# mkdir /var/local/osd{0,1,2}
[root@ceph2 ~]# mount /dev/sdb /var/local/osd1/
```

```
[root@ceph3 ~]# mkfs.xfs /dev/sdb
meta-data=/dev/sdb               isize=512    agcount=4, agsize=1310720 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=5242880, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@ceph3 ~]# mkdir /var/local/osd{0,1,2}
[root@ceph3 ~]# mount /dev/sdb /var/local/osd2/
```
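These mounts do not survive a reboot, and after one the OSD data directory would be empty. Below is a minimal sketch for making them persistent. It assumes the disk is /dev/sdb on every OSD node and uses the ceph1→osd0, ceph2→osd1, ceph3→osd2 layout above. `osd_fstab_line` is a hypothetical helper name. Referencing the filesystem UUID (from `blkid`) instead of /dev/sdb is more robust if device names change.

```shell
# Hypothetical helper: print the /etc/fstab line for the OSD data disk of a
# node, following the ceph1->osd0, ceph2->osd1, ceph3->osd2 layout used here.
osd_fstab_line() {
    local host=$1 dev=${2:-/dev/sdb}
    local n=${host#ceph}            # "ceph2" -> "2"
    case $n in
        1|2|3) ;;                   # only the three OSD nodes carry a data disk
        *) echo "no OSD disk on $host" >&2; return 1 ;;
    esac
    printf '%s /var/local/osd%d xfs defaults 0 0\n' "$dev" "$((n - 1))"
}
```

On each OSD node, something like `osd_fstab_line "$(hostname -s)" >> /etc/fstab && mount -a` would then record and apply the entry.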

Add the host names of all nodes to /etc/hosts on every node:

```
10.200.51.4 ceph1
10.200.51.9 ceph2
10.200.51.10 ceph3
10.200.51.113 ceph4
```

For example, append them in one command:

```
echo -e "10.200.51.4 ceph1\n10.200.51.9 ceph2\n10.200.51.10 ceph3\n10.200.51.113 ceph4" >> /etc/hosts
```
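Running the echo command twice duplicates the entries. Below is a minimal idempotent variant that assumes the four-node layout above. `add_ceph_hosts` is a hypothetical helper name. It only appends a host whose name does not already appear in the file.

```shell
# Append the cluster's address/name pairs to a hosts file, skipping any
# host name that already has an entry, so the step can be re-run safely.
add_ceph_hosts() {
    local hosts_file=${1:-/etc/hosts}
    local entry name
    for entry in "10.200.51.4 ceph1" "10.200.51.9 ceph2" \
                 "10.200.51.10 ceph3" "10.200.51.113 ceph4"; do
        name=${entry#* }
        # -w matches whole words only, so "ceph1" does not match "ceph10"
        if ! grep -qsw -- "$name" "$hosts_file"; then
            echo "$entry" >> "$hosts_file"
        fi
    done
}
```

Run `add_ceph_hosts` (defaulting to /etc/hosts) on each node.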

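Generate an SSH key on ceph1 and copy it to every node, so that ceph-deploy can log in without a password:

```
ssh-keygen
ssh-copy-id ceph1
ssh-copy-id ceph2
ssh-copy-id ceph3
ssh-copy-id ceph4
```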

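Synchronize the clocks. Run ntpd on ceph1 and sync ceph2 and ceph3 against it:

```
[root@ceph1 ~]# yum install ntp -y
[root@ceph1 ~]# systemctl start ntpd
[root@ceph2 ~]# ntpdate 10.200.51.4
[root@ceph3 ~]# ntpdate 10.200.51.4
```

Alternatively, sync all nodes against the same public NTP server:

```
ntpdate ntp1.aliyun.com
```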

On every node, configure the Jewel repository from the Aliyun mirror in a .repo file under /etc/yum.repos.d/ (for example ceph.repo):

```
[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=ceph noarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS
gpgcheck=0
priority=1
```
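To avoid editing the file by hand on every node, the same repository definition can be written from a script. This is a minimal sketch: `write_ceph_repo` is a hypothetical helper, and the default path /etc/yum.repos.d/ceph.repo is an assumption (yum reads any `*.repo` file in that directory). Note that yum only honors `priority=` when the yum-plugin-priorities package is installed.

```shell
# Write the Jewel repository definition shown above into a .repo file.
write_ceph_repo() {
    local repo_file=${1:-/etc/yum.repos.d/ceph.repo}
    local base=http://mirrors.aliyun.com/ceph/rpm-jewel/el7
    cat > "$repo_file" <<EOF
[ceph]
name=ceph
baseurl=$base/x86_64/
gpgcheck=0
priority=1

[ceph-noarch]
name=ceph noarch
baseurl=$base/noarch/
gpgcheck=0
priority=1

[ceph-source]
name=Ceph source packages
baseurl=$base/SRPMS
gpgcheck=0
priority=1
EOF
}
```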

Refresh the yum metadata and install ceph-deploy on the admin node (ceph1):

```
yum clean all
yum makecache
yum -y install ceph-deploy
```

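On ceph1, create the cluster in /etc/ceph with ceph1 as the initial monitor:

```
mkdir /etc/ceph && cd /etc/ceph
ceph-deploy new ceph1
[root@ceph1 ceph]# ls
ceph.conf ceph-deploy-ceph.log ceph.mon.keyring
```

This produces a Ceph configuration file, a monitor keyring and a log file.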

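Change the replica count

Optionally, lower the default replica count in the configuration file from 3 to 2, so that the cluster can reach active+clean with only two OSDs. To do this, add the last line below to the [global] section:

```
[root@ceph1 ceph]# vim ceph.conf
[global]
fsid = 94b37984-4bf3-44f4-ac08-3bb1a638c771
mon_initial_members = ceph1
mon_host = 10.200.51.4
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
osd_pool_default_size = 2
```

The fsid is generated by `ceph-deploy new` and differs for every deployment.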
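The same change can be scripted without an editor. Below is a minimal awk-based sketch; `set_default_size` is a hypothetical helper. It assumes the option appears in [global] either as `osd_pool_default_size` or in its space-separated spelling `osd pool default size`. Running it twice leaves a single setting in [global], and other sections are not touched.

```shell
# Set "osd_pool_default_size = <n>" in the [global] section of ceph.conf.
# An existing setting in [global] is dropped and the new line is written right
# after the [global] header; the file is rewritten in place so its owner and
# mode are preserved.
set_default_size() {
    local conf=${1:-/etc/ceph/ceph.conf} size=${2:-2}
    local tmp
    tmp=$(mktemp) || return 1
    awk -v size="$size" '
        # Track whether the current line belongs to the [global] section.
        /^\[/ { in_global = /^\[global\][ \t]*$/ }
        # Skip an existing default-size line inside [global] (either spelling).
        in_global && /^[ \t]*osd[_ ]pool[_ ]default[_ ]size[ \t]*=/ { next }
        { print }
        # Emit the new setting right after the [global] header.
        /^\[global\][ \t]*$/ { print "osd_pool_default_size = " size }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    local rc=$?
    rm -f "$tmp"
    return $rc
}
```

Usage on ceph1: `set_default_size /etc/ceph/ceph.conf 2`.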

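Install Ceph on all nodes from the admin node:

```
[root@ceph1 ceph]# ceph-deploy install ceph1 ceph2 ceph3 ceph4
```

When it finishes, check the installed version:

```
[root@ceph1 ceph]# ceph --version
ceph version 10.2.11 (e4b061b47f07f583c92a050d9e84b1813a35671e)
```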

Create the monitor on ceph1 and check its status through the admin socket:

```
[root@ceph1 ceph]# ceph-deploy mon create ceph1
[root@ceph1 ceph]# ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph1.asok mon_status
{
    "name": "ceph1",
    "rank": 0,
    "state": "leader",
    "election_epoch": 3,
    "quorum": [
        0
    ],
    "outside_quorum": [],
    "extra_probe_peers": [],
    "sync_provider": [],
    "monmap": {
        "epoch": 1,
        "fsid": "0fe8ad12-6f71-4a94-80cc-2d19a9217b4b",
        "modified": "2019-10-31 01:50:50.330750",
        "created": "2019-10-31 01:50:50.330750",
        "mons": [
            {
                "rank": 0,
                "name": "ceph1",
                "addr": "10.200.51.4:6789\/0"
            }
        ]
    }
}
```

Then gather the keys from the monitor:

```
[root@ceph1 ceph]# ceph-deploy gatherkeys ceph1
```

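Add the OSD nodes

Prepare the OSDs on the directories created earlier, /var/local/osd{id}:

```
[root@ceph1 ceph]# ceph-deploy osd prepare ceph1:/var/local/osd0 ceph2:/var/local/osd1 ceph3:/var/local/osd2
```

Before activating, make each OSD directory writable on its own node: osd0 on ceph1, osd1 on ceph2 and osd2 on ceph3. Since Jewel the OSD daemons run as the ceph user, so `chown -R ceph:ceph` on the directory is a narrower alternative to chmod 777.

```
chmod 777 -R /var/local/osd0/
chmod 777 -R /var/local/osd1/
chmod 777 -R /var/local/osd2/
```

Then activate the three OSDs:

```
[root@ceph1 ceph]# ceph-deploy osd activate ceph1:/var/local/osd0 ceph2:/var/local/osd1 ceph3:/var/local/osd2
```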

```
[root@ceph1 ceph]# ceph-deploy osd list ceph1 ceph2 ceph3
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (1.5.39): /usr/bin/ceph-deploy osd list ceph1 ceph2 ceph3
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : list
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f0d07370290>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] func : <function osd at 0x7f0d073be050>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] disk : [('ceph1', None, None), ('ceph2', None, None), ('ceph3', None, None)]
[ceph1][DEBUG ] connected to host: ceph1
[ceph1][DEBUG ] detect platform information from remote host
[ceph1][DEBUG ] detect machine type
[ceph1][DEBUG ] find the location of an executable
[ceph1][DEBUG ] find the location of an executable
[ceph1][INFO ] Running command: /bin/ceph --cluster=ceph osd tree --format=json
[ceph1][DEBUG ] connected to host: ceph1
[ceph1][DEBUG ] detect platform information from remote host
[ceph1][DEBUG ] detect machine type
[ceph1][DEBUG ] find the location of an executable
[ceph1][INFO ] Running command: /usr/sbin/ceph-disk list
[ceph1][INFO ] ----------------------------------------
[ceph1][INFO ] ceph-0
[ceph1][INFO ] ----------------------------------------
[ceph1][INFO ] Path /var/lib/ceph/osd/ceph-0
[ceph1][INFO ] ID 0
[ceph1][INFO ] Name osd.0
[ceph1][INFO ] Status up
[ceph1][INFO ] Reweight 1.0
[ceph1][INFO ] Active ok
[ceph1][INFO ] Magic ceph osd volume v026
[ceph1][INFO ] Whoami 0
[ceph1][INFO ] Journal path /var/local/osd0/journal
[ceph1][INFO ] ----------------------------------------
[ceph2][DEBUG ] connected to host: ceph2
[ceph2][DEBUG ] detect platform information from remote host
[ceph2][DEBUG ] detect machine type
[ceph2][DEBUG ] find the location of an executable
[ceph2][INFO ] Running command: /usr/sbin/ceph-disk list
[ceph2][INFO ] ----------------------------------------
[ceph2][INFO ] ceph-1
[ceph2][INFO ] ----------------------------------------
[ceph2][INFO ] Path /var/lib/ceph/osd/ceph-1
[ceph2][INFO ] ID 1
[ceph2][INFO ] Name osd.1
[ceph2][INFO ] Status up
[ceph2][INFO ] Reweight 1.0
[ceph2][INFO ] Active ok
[ceph2][INFO ] Magic ceph osd volume v026
[ceph2][INFO ] Whoami 1
[ceph2][INFO ] Journal path /var/local/osd1/journal
[ceph2][INFO ] ----------------------------------------
[ceph3][DEBUG ] connected to host: ceph3
[ceph3][DEBUG ] detect platform information from remote host
[ceph3][DEBUG ] detect machine type
[ceph3][DEBUG ] find the location of an executable
[ceph3][INFO ] Running command: /usr/sbin/ceph-disk list
[ceph3][INFO ] ----------------------------------------
[ceph3][INFO ] ceph-2
[ceph3][INFO ] ----------------------------------------
[ceph3][INFO ] Path /var/lib/ceph/osd/ceph-2
[ceph3][INFO ] ID 2
[ceph3][INFO ] Name osd.2
[ceph3][INFO ] Status up
[ceph3][INFO ] Reweight 1.0
[ceph3][INFO ] Active ok
[ceph3][INFO ] Magic ceph osd volume v026
[ceph3][INFO ] Whoami 2
[ceph3][INFO ] Journal path /var/local/osd2/journal
[ceph3][INFO ] ----------------------------------------
```

Push the configuration file and the admin keyring to the cluster nodes:

```
[root@ceph1 ceph]# ceph-deploy admin ceph1 ceph2 ceph3
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (1.5.39): /usr/bin/ceph-deploy admin ceph1 ceph2 ceph3
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7feff2220cb0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] client : ['ceph1', 'ceph2', 'ceph3']
[ceph_deploy.cli][INFO ] func : <function admin at 0x7feff2f39a28>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph1
[ceph1][DEBUG ] connected to host: ceph1
[ceph1][DEBUG ] detect platform information from remote host
[ceph1][DEBUG ] detect machine type
[ceph1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph2
[ceph2][DEBUG ] connected to host: ceph2
[ceph2][DEBUG ] detect platform information from remote host
[ceph2][DEBUG ] detect machine type
[ceph2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph3
[ceph3][DEBUG ] connected to host: ceph3
[ceph3][DEBUG ] detect platform information from remote host
[ceph3][DEBUG ] detect machine type
[ceph3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
```

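On each node, make ceph.client.admin.keyring readable. This lets every local user read the admin key.

```
chmod +r /etc/ceph/ceph.client.admin.keyring
```

Check the cluster health:

```
[root@ceph1 ceph]# ceph health
HEALTH_OK
```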
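Right after the OSDs are activated, `ceph health` may report HEALTH_WARN for a while, until the placement groups have been created and peered. Below is a minimal polling sketch. It assumes the `ceph` CLI and an admin keyring are available on the node. `wait_for_health_ok` is a hypothetical helper, and the 5-second interval is arbitrary.

```shell
# Poll `ceph health` until it prints exactly HEALTH_OK, or give up after
# roughly $1 seconds (default 300). Returns 0 on success, 1 on timeout.
wait_for_health_ok() {
    local timeout=${1:-300} waited=0
    until [ "$(ceph health 2>/dev/null)" = "HEALTH_OK" ]; do
        if (( waited >= timeout )); then
            return 1
        fi
        sleep 5
        waited=$(( waited + 5 ))
    done
}
```

For example, `wait_for_health_ok 600 || echo "cluster not healthy yet"`.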

Create the metadata servers (MDS) on ceph2 and ceph3:

```
[root@ceph1 ceph]# ceph-deploy mds create ceph2 ceph3
```

Check the cluster status:

```
[root@ceph1 ceph]# ceph mds stat
e4:, 3 up:standby
[root@ceph1 ceph]# ceph -s
    cluster 0fe8ad12-6f71-4a94-80cc-2d19a9217b4b
     health HEALTH_OK
     monmap e1: 1 mons at {ceph1=10.200.51.4:6789/0}
            election epoch 3, quorum 0 ceph1
     osdmap e15: 3 osds: 3 up, 3 in
            flags sortbitwise,require_jewel_osds
      pgmap v25: 64 pgs, 1 pools, 0 bytes data, 0 objects
            15681 MB used, 45728 MB / 61410 MB avail
                  64 active+clean
```

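Create the file system. First check that none exists yet:

```
[root@ceph1 ceph]# ceph fs ls
No filesystems enabled
```

Create a data pool and a metadata pool with 128 placement groups each. Then create the file system with `ceph fs new <fs_name> <metadata_pool> <data_pool>`:

```
[root@ceph1 ceph]# ceph osd pool create cephfs_data 128
pool 'cephfs_data' created
[root@ceph1 ceph]# ceph osd pool create cephfs_metadata 128
pool 'cephfs_metadata' created
[root@ceph1 ceph]# ceph fs new cephfs cephfs_metadata cephfs_data
new fs with metadata pool 2 and data pool 1
[root@ceph1 ceph]# ceph fs ls
name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]
```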
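The 128 passed to `ceph osd pool create` is the pool's placement-group (PG) count. The Ceph placement-group documentation gives a rule of thumb for the total across pools: (number of OSDs × 100) / replica count, rounded up to a power of two.

The sketch below applies that arithmetic to this cluster: 3 OSDs with `osd_pool_default_size = 2`. It also computes the average number of PG replicas per OSD for the 64-PG pool already shown by `ceph -s` plus the two new 128-PG pools. The function names are hypothetical.

```shell
# Rule-of-thumb total PG count: (OSDs * 100) / replicas, rounded up to a power of 2.
pg_target() {
    local osds=$1 size=$2
    local raw=$(( (osds * 100 + size - 1) / size ))   # ceiling division
    local pg=1
    while (( pg < raw )); do pg=$(( pg * 2 )); done
    echo "$pg"
}

# Average number of PG replicas hosted per OSD, given each pool's PG count.
pgs_per_osd() {
    local osds=$1 size=$2; shift 2
    local total=0 n
    for n in "$@"; do total=$(( total + n )); done
    echo $(( total * size / osds ))
}
```

`pg_target 3 2` prints 256, so the 320 PGs in total here are in the expected range. `pgs_per_osd 3 2 64 128 128` prints 213, which is below Jewel's default `mon_pg_warn_max_per_osd` warning threshold of 300.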

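Once the file system has been created, an MDS becomes active. For example, with a single active MDS:

```
[root@ceph1 ceph]# ceph mds stat
e7: 1/1/1 up {0=ceph3=up:active}, 2 up:standby
```

One MDS (ceph3) is active. The other two are in standby, ready to take over if the active one fails.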

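Mounting CephFS (kernel client)

Client-side configuration:

Store the secret key on the client; /opt is used as the mount point here. This step is needed when the Ceph configuration and admin keyring were not copied to the client with ceph-deploy from the admin node (ceph4 was not included in `ceph-deploy admin` above). Read the key on a node that has the admin keyring:

```
[root@ceph3 ~]# cat /etc/ceph/ceph.client.admin.keyring
[client.admin]
key = AQA6drpdei+IORAA7Ogqh9Wn0NbdGI/juTXnqw==
```

Save the value of `key` on the client as /etc/ceph/admin.secret:

```
[root@ceph4 ceph]# cat /etc/ceph/admin.secret
AQA6drpdei+IORAA7Ogqh9Wn0NbdGI/juTXnqw==
```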
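Copying the key by hand is error-prone. Below is a minimal sketch that extracts it from a copy of the keyring; `keyring_to_secret` is a hypothetical helper. It assumes the keyring has already been copied to the client (for example with scp from ceph1). On a node with admin access, `ceph auth get-key client.admin` prints the same value directly.

```shell
# Extract the base64 key from a keyring file and write it, alone on one line,
# to a secret file readable only by root (same content as admin.secret above).
keyring_to_secret() {
    local keyring=${1:-/etc/ceph/ceph.client.admin.keyring}
    local secret=${2:-/etc/ceph/admin.secret}
    local key
    # Keyring lines look like "<tab>key = AQ...==": field 3 is the key itself.
    key=$(awk '$1 == "key" && $2 == "=" { print $3; exit }' "$keyring")
    if [ -z "$key" ]; then
        echo "no key found in $keyring" >&2
        return 1
    fi
    printf '%s\n' "$key" > "$secret" && chmod 600 "$secret"
}
```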

To mount a Ceph file system with cephx authentication enabled, you must specify the user name and the secret key:

```
[root@ceph4 ~]# mount -t ceph 10.200.51.4:6789:/ /opt/ -o name=admin,secretfile=/etc/ceph/admin.secret
```

Check the mount:

```
[root@ceph4 ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 475M     0  475M   0% /dev
tmpfs                    487M     0  487M   0% /dev/shm
tmpfs                    487M  7.6M  479M   2% /run
tmpfs                    487M     0  487M   0% /sys/fs/cgroup
/dev/mapper/centos-root   27G  1.6G   26G   6% /
/dev/sda1               1014M  136M  879M  14% /boot
tmpfs                     98M     0   98M   0% /run/user/0
10.200.51.4:6789:/        60G   16G   45G  26% /opt
```
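To have the client remount CephFS at boot, the same options can go into /etc/fstab with the `ceph` file system type. This is a minimal sketch; `cephfs_fstab_line` is a hypothetical helper whose defaults mirror the mount command above. `_netdev` delays the mount until the network is up, `noatime` avoids an update on every read, and the fsck pass is set to 0 because there is nothing local to check.

```shell
# Build an /etc/fstab entry for the kernel CephFS mount used above.
cephfs_fstab_line() {
    local mon=${1:-10.200.51.4:6789} mnt=${2:-/opt}
    local secret=${3:-/etc/ceph/admin.secret}
    printf '%s:/ %s ceph name=admin,secretfile=%s,noatime,_netdev 0 0\n' \
        "$mon" "$mnt" "$secret"
}
```

On ceph4, `cephfs_fstab_line >> /etc/fstab && mount -a` would record the entry and mount it.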

Unmount:

```
[root@ceph4 ~]# umount /opt/
[root@ceph4 ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
devtmpfs                 475M     0  475M   0% /dev
tmpfs                    487M     0  487M   0% /dev/shm
tmpfs                    487M  7.6M  479M   2% /run
tmpfs                    487M     0  487M   0% /sys/fs/cgroup
/dev/mapper/centos-root   27G  1.6G   26G   6% /
/dev/sda1               1014M  136M  879M  14% /boot
tmpfs                     98M     0   98M   0% /run/user/0
```